LLM in Action: Article Summarization with LangChain and Ollama


OllamaLLM is a class from the langchain-ollama package that connects LangChain to Ollama, a tool for downloading and running open large language models (such as Llama 3.2) on your own machine. It lets you use Ollama-served models inside LangChain's ecosystem, so you can build natural language processing (NLP) applications on top of locally running models.

How to run Ollama and LangChain in Google Colab?

The command !pip install ollama installs the ollama Python package into the Colab environment. This package is a client library for talking to an Ollama server from Python code in your notebook. It does not install the Ollama server itself; that is set up separately with the Ollama install script later in this article.

```
!pip install ollama
```

Output:

```
Requirement already satisfied: ollama in /usr/local/lib/python3.10/dist-packages (0.4.2)
Requirement already satisfied: httpx<0.28.0,>=0.27.0 in /usr/local/lib/python3.10/dist-packages (from ollama) (0.27.2)
Requirement already satisfied: pydantic<3.0.0,>=2.9.0 in /usr/local/lib/python3.10/dist-packages (from ollama) (2.9.2)
Requirement already satisfied: anyio in /usr/local/lib/python3.10/dist-packages (from httpx<0.28.0,>=0.27.0->ollama) (3.7.1)
Requirement already satisfied: certifi in /usr/local/lib/python3.10/dist-packages (from httpx<0.28.0,>=0.27.0->ollama) (2024.8.30)
Requirement already satisfied: httpcore==1.* in /usr/local/lib/python3.10/dist-packages (from httpx<0.28.0,>=0.27.0->ollama) (1.0.7)
Requirement already satisfied: idna in /usr/local/lib/python3.10/dist-packages (from httpx<0.28.0,>=0.27.0->ollama) (3.10)
Requirement already satisfied: sniffio in /usr/local/lib/python3.10/dist-packages (from httpx<0.28.0,>=0.27.0->ollama) (1.3.1)
Requirement already satisfied: h11<0.15,>=0.13 in /usr/local/lib/python3.10/dist-packages (from httpcore==1.*->httpx<0.28.0,>=0.27.0->ollama) (0.14.0)
Requirement already satisfied: annotated-types>=0.6.0 in /usr/local/lib/python3.10/dist-packages (from pydantic<3.0.0,>=2.9.0->ollama) (0.7.0)
Requirement already satisfied: pydantic-core==2.23.4 in /usr/local/lib/python3.10/dist-packages (from pydantic<3.0.0,>=2.9.0->ollama) (2.23.4)
Requirement already satisfied: typing-extensions>=4.6.1 in /usr/local/lib/python3.10/dist-packages (from pydantic<3.0.0,>=2.9.0->ollama) (4.12.2)
Requirement already satisfied: exceptiongroup in /usr/local/lib/python3.10/dist-packages (from anyio->httpx<0.28.0,>=0.27.0->ollama) (1.2.2)
```
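Under the hood, the ollama package talks to the Ollama server over HTTP; for example, ollama.generate(model="llama3.2", prompt="...") posts to the server's /api/generate endpoint. As an illustration only (a standard-library sketch, not the package's actual code), the request looks roughly like this. It assumes the server runs at Ollama's default address, http://localhost:11434, and build_generate_request and generate are hypothetical helper names.

```python
import json
import urllib.request

# Assumption: the Ollama server listens on its default address.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str, base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Hypothetical helper: build a POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}  # ask for one JSON reply, not a stream
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, base_url: str = OLLAMA_URL, timeout: float = 120.0) -> str:
    """Hypothetical helper: send the request and return the generated text.

    Requires a running Ollama server with the model already pulled.
    """
    req = build_generate_request(model, prompt, base_url)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["response"]  # non-streaming replies carry the text in "response"
```

Once the server is running, print(generate("llama3.2", "Say hello in one sentence.")) should print the model's reply. In practice the ollama package (and LangChain on top of it) handles this plumbing for you.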

Next, install langchain-ollama, LangChain's integration package for Ollama, with the following command:

```
%pip install -U langchain-ollama
```

Output:

```
Collecting langchain-ollama
Downloading langchain_ollama-0.2.0-py3-none-any.whl.metadata (1.8 kB)
Requirement already satisfied: langchain-core<0.4.0,>=0.3.0 in /usr/local/lib/python3.10/dist-packages (from langchain-ollama) (0.3.19)
Requirement already satisfied: ollama<1,>=0.3.0 in /usr/local/lib/python3.10/dist-packages (from langchain-ollama) (0.4.1)
Requirement already satisfied: PyYAML>=5.3 in /usr/local/lib/python3.10/dist-packages (from langchain-core<0.4.0,>=0.3.0->langchain-ollama) (6.0.2)
Requirement already satisfied: jsonpatch<2.0,>=1.33 in /usr/local/lib/python3.10/dist-packages (from langchain-core<0.4.0,>=0.3.0->langchain-ollama) (1.33)
Requirement already satisfied: langsmith<0.2.0,>=0.1.125 in /usr/local/lib/python3.10/dist-packages (from langchain-core<0.4.0,>=0.3.0->langchain-ollama) (0.1.143)
Requirement already satisfied: packaging<25,>=23.2 in /usr/local/lib/python3.10/dist-packages (from langchain-core<0.4.0,>=0.3.0->langchain-ollama) (24.2)
Requirement already satisfied: pydantic<3.0.0,>=2.5.2 in /usr/local/lib/python3.10/dist-packages (from langchain-core<0.4.0,>=0.3.0->langchain-ollama) (2.9.2)
Requirement already satisfied: tenacity!=8.4.0,<10.0.0,>=8.1.0 in /usr/local/lib/python3.10/dist-packages (from langchain-core<0.4.0,>=0.3.0->langchain-ollama) (9.0.0)
Requirement already satisfied: typing-extensions>=4.7 in /usr/local/lib/python3.10/dist-packages (from langchain-core<0.4.0,>=0.3.0->langchain-ollama) (4.12.2)
Requirement already satisfied: httpx<0.28.0,>=0.27.0 in /usr/local/lib/python3.10/dist-packages (from ollama<1,>=0.3.0->langchain-ollama) (0.27.2)
Requirement already satisfied: anyio in /usr/local/lib/python3.10/dist-packages (from httpx<0.28.0,>=0.27.0->ollama<1,>=0.3.0->langchain-ollama) (3.7.1)
Requirement already satisfied: certifi in /usr/local/lib/python3.10/dist-packages (from httpx<0.28.0,>=0.27.0->ollama<1,>=0.3.0->langchain-ollama) (2024.8.30)
Requirement already satisfied: httpcore==1.* in /usr/local/lib/python3.10/dist-packages (from httpx<0.28.0,>=0.27.0->ollama<1,>=0.3.0->langchain-ollama) (1.0.7)
Requirement already satisfied: idna in /usr/local/lib/python3.10/dist-packages (from httpx<0.28.0,>=0.27.0->ollama<1,>=0.3.0->langchain-ollama) (3.10)
Requirement already satisfied: sniffio in /usr/local/lib/python3.10/dist-packages (from httpx<0.28.0,>=0.27.0->ollama<1,>=0.3.0->langchain-ollama) (1.3.1)
Requirement already satisfied: h11<0.15,>=0.13 in /usr/local/lib/python3.10/dist-packages (from httpcore==1.*->httpx<0.28.0,>=0.27.0->ollama<1,>=0.3.0->langchain-ollama) (0.14.0)
Requirement already satisfied: jsonpointer>=1.9 in /usr/local/lib/python3.10/dist-packages (from jsonpatch<2.0,>=1.33->langchain-core<0.4.0,>=0.3.0->langchain-ollama) (3.0.0)
Requirement already satisfied: orjson<4.0.0,>=3.9.14 in /usr/local/lib/python3.10/dist-packages (from langsmith<0.2.0,>=0.1.125->langchain-core<0.4.0,>=0.3.0->langchain-ollama) (3.10.11)
Requirement already satisfied: requests<3,>=2 in /usr/local/lib/python3.10/dist-packages (from langsmith<0.2.0,>=0.1.125->langchain-core<0.4.0,>=0.3.0->langchain-ollama) (2.32.3)
Requirement already satisfied: requests-toolbelt<2.0.0,>=1.0.0 in /usr/local/lib/python3.10/dist-packages (from langsmith<0.2.0,>=0.1.125->langchain-core<0.4.0,>=0.3.0->langchain-ollama) (1.0.0)
Requirement already satisfied: annotated-types>=0.6.0 in /usr/local/lib/python3.10/dist-packages (from pydantic<3.0.0,>=2.5.2->langchain-core<0.4.0,>=0.3.0->langchain-ollama) (0.7.0)
Requirement already satisfied: pydantic-core==2.23.4 in /usr/local/lib/python3.10/dist-packages (from pydantic<3.0.0,>=2.5.2->langchain-core<0.4.0,>=0.3.0->langchain-ollama) (2.23.4)
Requirement already satisfied: charset-normalizer<4,>=2 in /usr/local/lib/python3.10/dist-packages (from requests<3,>=2->langsmith<0.2.0,>=0.1.125->langchain-core<0.4.0,>=0.3.0->langchain-ollama) (3.4.0)
Requirement already satisfied: urllib3<3,>=1.21.1 in /usr/local/lib/python3.10/dist-packages (from requests<3,>=2->langsmith<0.2.0,>=0.1.125->langchain-core<0.4.0,>=0.3.0->langchain-ollama) (2.2.3)
Requirement already satisfied: exceptiongroup in /usr/local/lib/python3.10/dist-packages (from anyio->httpx<0.28.0,>=0.27.0->ollama<1,>=0.3.0->langchain-ollama) (1.2.2)
Downloading langchain_ollama-0.2.0-py3-none-any.whl (14 kB)
Installing collected packages: langchain-ollama
Successfully installed langchain-ollama-0.2.0
```

- %pip: In Jupyter and Colab notebooks, %pip is a "magic" command that runs pip directly from a cell. It works like running !pip, but it guarantees that the package is installed into the environment of the running notebook kernel.

- install: Tells pip to install the specified package (in this case, langchain-ollama).

- -U (or --upgrade): Tells pip to upgrade the package to the latest version if an older version is already installed. If the package isn't installed, pip simply installs the latest version.

- langchain-ollama: The name of the package being installed or upgraded. It is LangChain's integration package for Ollama; installing it makes classes such as OllamaLLM available in your environment for working with Ollama-served models.
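To confirm which versions actually ended up in the notebook's environment after these installs, you can query the package metadata from Python. This is a small sketch using the standard library's importlib.metadata; installed_version is a hypothetical helper name.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_version(package: str) -> Optional[str]:
    """Hypothetical helper: return a distribution's installed version, or None if missing."""
    try:
        return version(package)
    except PackageNotFoundError:
        return None


# Check the packages installed in the cells above.
for pkg in ("ollama", "langchain-ollama", "langchain-core"):
    print(pkg, installed_version(pkg) or "not installed")
```

If langchain-ollama still reports an older version after %pip install -U, restarting the Colab runtime usually makes the upgraded package visible.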

To open a terminal shell inside a Google Colab notebook, install the colab-xterm package by running the following command in a code cell:

```
!pip install colab-xterm
```

Output:

```
Collecting colab-xterm
Downloading colab_xterm-0.2.0-py3-none-any.whl.metadata (1.2 kB)
Requirement already satisfied: ptyprocess~=0.7.0 in /usr/local/lib/python3.10/dist-packages (from colab-xterm) (0.7.0)
Requirement already satisfied: tornado>5.1 in /usr/local/lib/python3.10/dist-packages (from colab-xterm) (6.3.3)
Downloading colab_xterm-0.2.0-py3-none-any.whl (115 kB)
━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━━ 115.6/115.6 kB 2.5 MB/s eta 0:00:00
Installing collected packages: colab-xterm
Successfully installed colab-xterm-0.2.0
```

After installing it, load the extension in a new notebook cell:

```
%load_ext colabxterm
```

Then start the terminal:

```
%xterm
```
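If you would rather not keep a terminal open, a common alternative (swapped in here; it is not the approach this article uses) is to start the Ollama server from a notebook cell with Python's subprocess module. The sketch below assumes the ollama CLI has already been installed with the install script shown next; start_ollama_server and the ollama.log path are hypothetical names.

```python
import shutil
import subprocess
from typing import Optional


def start_ollama_server(log_path: str = "ollama.log") -> Optional[subprocess.Popen]:
    """Hypothetical helper: launch `ollama serve` in the background.

    Returns None if the ollama CLI is not on PATH (install it first).
    """
    if shutil.which("ollama") is None:
        return None  # the Ollama install script has not been run yet
    with open(log_path, "w") as log:
        # The child process gets its own copy of the file descriptor,
        # so closing our handle after Popen returns does not stop its logging.
        return subprocess.Popen(["ollama", "serve"], stdout=log, stderr=subprocess.STDOUT)
```

Calling server = start_ollama_server() keeps the server running for the rest of the session; server.terminate() stops it.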

A terminal will open inside the Colab cell's output. Use it to install Ollama and download the llama3.2 model onto the Colab machine.

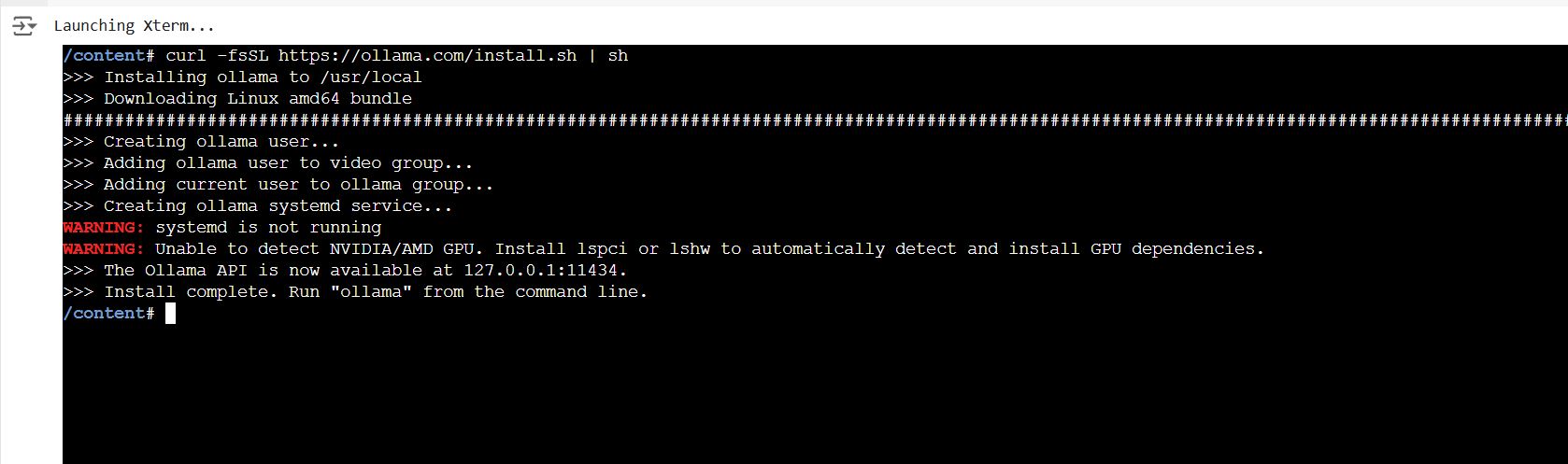

Install Ollama (the server and its CLI) with:

```bash
curl -fsSL https://ollama.com/install.sh | sh
```

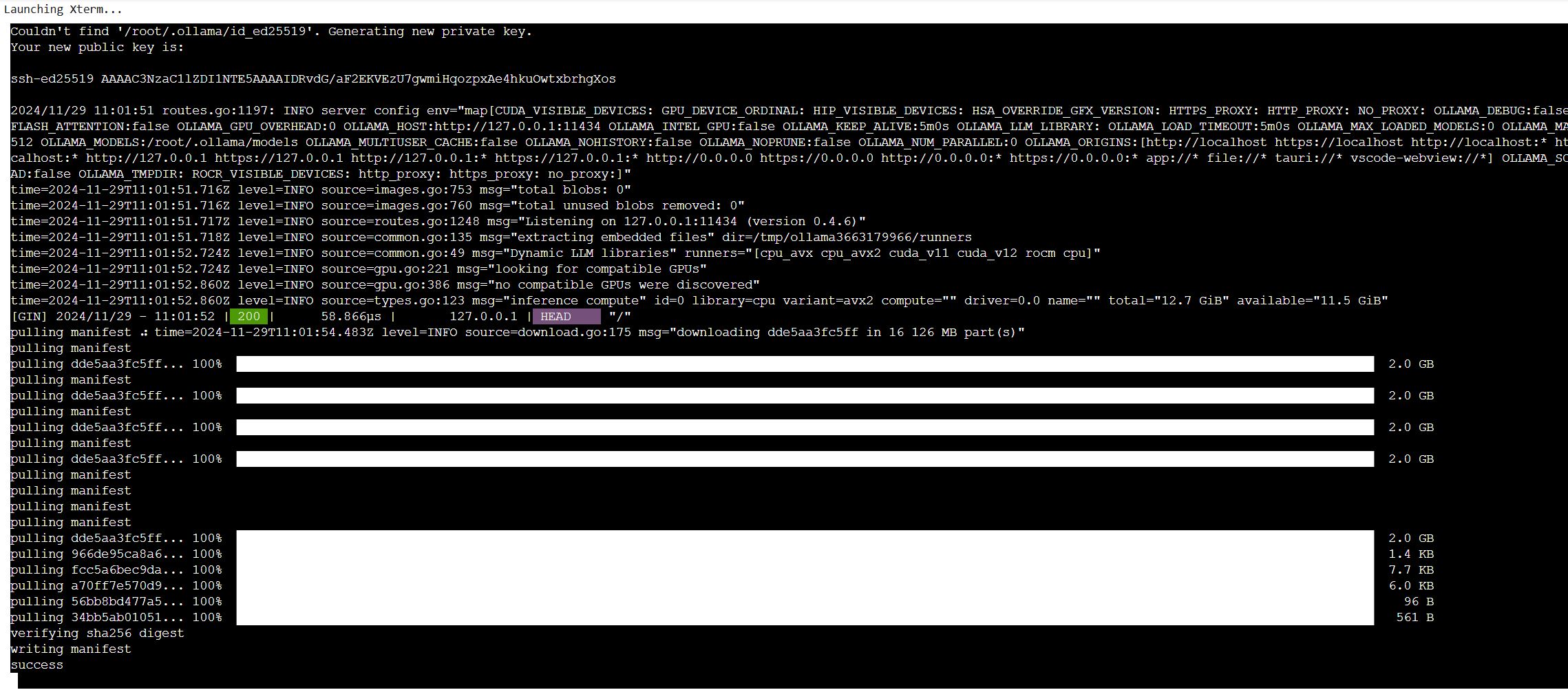

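Start the server and download a model: After installation, run:

```bash
ollama serve &
ollama pull llama3.2
```

ollama serve starts the Ollama server in the background (by default it listens on http://localhost:11434), and ollama pull llama3.2 downloads the llama3.2 model from Ollama's library to the Colab machine. The server has to be up before the pull, and it must stay running afterwards, because your Python code (including LangChain's OllamaLLM) sends its requests to it over HTTP.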
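Before running any LangChain code, it can help to check from Python that the server answers and that the model was pulled. The sketch below polls Ollama's /api/tags endpoint, which lists locally available models; it assumes the default address http://localhost:11434, and model_names and wait_for_models are hypothetical helper names.

```python
import json
import time
import urllib.request
from typing import List, Optional

# Assumption: Ollama's default address; change it if you configured another host/port.
OLLAMA_URL = "http://localhost:11434"


def model_names(tags_json: str) -> List[str]:
    """Extract model names from an /api/tags response body."""
    return [m["name"] for m in json.loads(tags_json).get("models", [])]


def wait_for_models(base_url: str = OLLAMA_URL, timeout: float = 30.0) -> Optional[List[str]]:
    """Poll /api/tags until the server answers; return local model names, or None on timeout."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        try:
            with urllib.request.urlopen(f"{base_url}/api/tags", timeout=2) as resp:
                return model_names(resp.read().decode("utf-8"))
        except (OSError, ValueError):
            # OSError: server not reachable yet; ValueError: reply was not the expected JSON.
            time.sleep(1)
    return None


models = wait_for_models(timeout=5)
if models is None:
    print("Ollama server is not reachable")
elif not any(name.startswith("llama3.2") for name in models):
    print("Server is up, but llama3.2 has not been pulled yet")
else:
    print("Ready:", models)
```

A pulled model normally shows up with its tag, e.g. llama3.2:latest, which is why the check uses startswith.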

Time to experiment

The example below demonstrates how to use LangChain with the Llama 3.2 model served by Ollama to build a pipeline (or "chain") that summarizes an article.

1. Import Required Classes

```python
from langchain_core.prompts import ChatPromptTemplate
from langchain_ollama.llms import OllamaLLM
```

Explain:

- ChatPromptTemplate: Defines a template for the input prompt, so every input is structured in a consistent format.
- OllamaLLM: Represents the connection to an LLM served by Ollama (like llama3.2) running locally. It is a text-completion interface: it takes a prompt and returns the generated text as a string.

2. Define the Prompt Template

```python
template = """Summarize the following article in one concise paragraph: {article} Return the summary as a JSON object with the format {{'summary':...}} without additional text """

prompt = ChatPromptTemplate.from_template(template)
```

Explain:

- template: Specifies the task for the model. The article text is substituted wherever {article} appears. The model is instructed to:
  - summarize the provided article in one concise paragraph;
  - return the result as a JSON object with the key summary;
  - avoid including any text outside the JSON.
- The doubled braces in {{'summary':...}} are escapes: they produce literal { and } characters in the final prompt instead of being treated as a template variable.
- ChatPromptTemplate.from_template: Creates a prompt object from the string template.
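ChatPromptTemplate.from_template uses Python's str.format-style ("f-string") template syntax by default, so plain str.format is a convenient way to preview how the template gets filled in and how the doubled braces behave. This is only an illustration with the standard library; LangChain performs the substitution itself when the chain runs.

```python
# Same template string as above.
template = """Summarize the following article in one concise paragraph: {article} Return the summary as a JSON object with the format {{'summary':...}} without additional text """

# {article} is replaced; {{ and }} collapse to literal braces.
filled = template.format(article="Bitcoin is a cryptocurrency.")
print(filled)

# Without escaping, single braces would be parsed as another variable named "summary".
try:
    "Return {summary}".format(article="x")
except KeyError as err:
    print("unescaped braces are treated as a variable:", err)
```

The same rule applies inside LangChain: an unescaped {summary} would become a second required input variable of the prompt.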

3. Connect to the Ollama Model

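```python
model = OllamaLLM(model="llama3.2", base_url="http://localhost:11434")
```

Explain:

- model="llama3.2": Specifies which Ollama model to use. It must match a model you have already pulled.
- base_url="http://localhost:11434": Points to the local Ollama server hosting the model. OllamaLLM takes the server address through its base_url parameter, and 11434 is Ollama's default port. If you omit base_url, the default local server address is used.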
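If your server does not run on the default address (for example, you started it with the OLLAMA_HOST environment variable), you need base_url to match. The sketch below is a hypothetical helper, resolve_base_url, that derives a base_url from OLLAMA_HOST and falls back to http://localhost:11434; it is simplified and only handles hostnames and IPv4 addresses.

```python
import os
from typing import Mapping, Optional
from urllib.parse import urlsplit

DEFAULT_PORT = 11434  # Ollama's default port
DEFAULT_OLLAMA_URL = f"http://localhost:{DEFAULT_PORT}"


def resolve_base_url(env: Optional[Mapping[str, str]] = None) -> str:
    """Hypothetical helper: derive a base_url from OLLAMA_HOST, else use the default.

    Simplified: hostnames and IPv4 addresses only.
    """
    env = os.environ if env is None else env
    host = env.get("OLLAMA_HOST", "").strip()
    if not host:
        return DEFAULT_OLLAMA_URL
    if "://" not in host:
        host = "http://" + host  # OLLAMA_HOST is often written as host:port
    parts = urlsplit(host)
    return f"{parts.scheme}://{parts.hostname}:{parts.port or DEFAULT_PORT}"


# Usage with LangChain (requires langchain-ollama):
# model = OllamaLLM(model="llama3.2", base_url=resolve_base_url())
print(resolve_base_url())
```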

4. Create the Chain

```python
chain = prompt | model
```

Explain:

- The | operator combines the prompt with the model into a pipeline (chain).
- This pipeline takes the formatted prompt and sends it to the model for inference.
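In LangChain, prompts and models are Runnable objects, and | is implemented with Python operator overloading: prompt | model returns a RunnableSequence whose invoke() passes each step's output to the next step. The toy Step class below is a hypothetical, heavily simplified stand-in (not LangChain's code) that shows the same idea with plain functions; fake_prompt and fake_model are made-up placeholders.

```python
from typing import Any, Callable


class Step:
    """Toy stand-in for a LangChain Runnable: wraps a function and supports `|`."""

    def __init__(self, fn: Callable[[Any], Any]):
        self.fn = fn

    def invoke(self, value: Any) -> Any:
        return self.fn(value)

    def __or__(self, other: "Step") -> "Step":
        # a | b builds a new Step that feeds a's output into b.
        return Step(lambda value: other.invoke(self.invoke(value)))


# Hypothetical placeholders for the prompt template and the model.
fake_prompt = Step(lambda inputs: "Summarize: " + inputs["article"])
fake_model = Step(lambda text: text.upper())  # pretend "inference"

toy_chain = fake_prompt | fake_model
print(toy_chain.invoke({"article": "bitcoin is a cryptocurrency"}))
```

This prints SUMMARIZE: BITCOIN IS A CRYPTOCURRENCY. The real RunnableSequence adds batching, streaming, and async support on top of this basic composition.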

5. Define the Article

```python
my_article = """
Bitcoin (BTC) is a cryptocurrency (a virtual currency) designed to act as money and a form of payment outside the control of any one person...
"""
```

6. Invoke the Chain

```python
result = chain.invoke({"article": my_article})

print(result)
```

Explain:

- chain.invoke: Executes the chain. The dictionary {"article": my_article} fills the {article} variable in the prompt template.
- The chain sends the formatted prompt to the model, and the model returns the summary as a plain string, so print(result) shows the model's raw text reply.

Example model response:

```
{'summary': 'Bitcoin is a decentralized cryptocurrency introduced in 2009 that eliminates the need for third-party involvement in transactions. It has become the largest and most influential cryptocurrency, with a market cap that affects the entire crypto market.'}
```
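Because OllamaLLM returns a plain string, result is text, not a Python dict. Note that the example reply uses single quotes: it is a valid Python dict literal but not valid JSON (JSON requires double quotes), which follows from the {{'summary':...}} format shown in the prompt. The hedged sketch below, a hypothetical helper named parse_summary, tries strict JSON first and falls back to ast.literal_eval; model output is never guaranteed to be well-formed, so it still raises an error when no object can be recovered.

```python
import ast
import json
import re
from typing import Any, Dict


def parse_summary(raw: str) -> Dict[str, Any]:
    """Hypothetical helper: turn the model's text reply into a dict."""
    # Keep only the span from the first "{" to the last "}", dropping any
    # chatter or Markdown code fences the model may add around the object.
    match = re.search(r"\{.*\}", raw, re.DOTALL)
    if match is None:
        raise ValueError(f"No JSON object found in: {raw!r}")
    candidate = match.group(0)
    try:
        value = json.loads(candidate)  # strict JSON (double quotes)
    except json.JSONDecodeError:
        # Single-quoted replies are Python literals, not JSON.
        value = ast.literal_eval(candidate)
    if not isinstance(value, dict):
        raise ValueError("Reply is not a JSON object")
    return value


reply = "{'summary': 'Bitcoin is a decentralized cryptocurrency.'}"
print(parse_summary(reply)["summary"])
```

With the chain above, summary = parse_summary(result)["summary"] extracts just the summary text. Changing the prompt's example to {{"summary": "..."}} usually nudges the model toward strict JSON.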

Full snippet:

```python
from langchain_core.prompts import ChatPromptTemplate
from langchain_ollama.llms import OllamaLLM

template = """Summarize the following article in one concise paragraph: {article} Return the summary as a JSON object with the format {{'summary':...}} without additional text """

prompt = ChatPromptTemplate.from_template(template)

# base_url must point at the running Ollama server (default port 11434)
model = OllamaLLM(model="llama3.2", base_url="http://localhost:11434")

# prompt | model: format the prompt, then send it to the model
chain = prompt | model

my_article = """
Bitcoin (BTC) is a cryptocurrency (a virtual currency) designed to act as money and a form of payment outside the control of any one person, group, or entity.
This removes the need for trusted third-party involvement (e.g., a mint or bank) in financial transactions.
Bitcoin was introduced to the public in 2009 by an anonymous developer or group of developers using the name Satoshi Nakamoto.
It has since become the most well-known and largest cryptocurrency in the world. Its popularity has inspired the development of many other cryptocurrencies.
Its price rose from roughly $73,000 on Election Day to an all-time high of over $98,000 early Thursday morning, according to CoinGecko data.
It's gone up nearly 8% in the past week, over 41% in the past month, and up 141% in the past year. Cryptocurrency prices are notoriously volatile.
But the larger a coin's market cap, the more money it takes to spike or dump its price.
Bitcoin is the largest cryptocurrency by market cap and the oldest, and its price movements can influence the rest of the crypto market.
"""

# Fills {article}, runs the model, and returns its reply as a string
result = chain.invoke({"article": my_article})

print(result)
```

Make sure the Ollama server is running and the llama3.2 model has been pulled before running this snippet; otherwise the chain.invoke(...) call will raise an error.

Author

As the founder and passionate educator behind this platform, I’m dedicated to sharing practical knowledge in programming to help you grow. Whether you’re a beginner exploring Machine Learning, PHP, Laravel, Python, Java, or Android Development, you’ll find tutorials here that are simple, accessible, and easy to understand. My mission is to make learning enjoyable and effective for everyone. Dive in, start learning, and don’t forget to follow along for more tips and insights!
